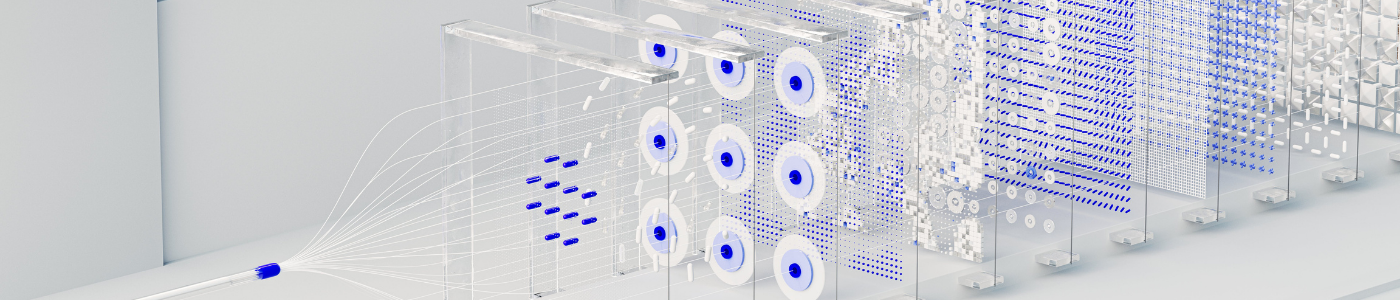

How attackers poison AI tools and defences

Barracuda has reported on how generative AI is being used to create and distribute spam emails and to craft highly persuasive phishing attacks. These threats continue to evolve and escalate, but they are not the only ways in which attackers leverage AI.

Security researchers are now seeing threat actors manipulate companies' AI tools and tamper with their AI security features to steal and compromise information and weaken a target's defences.
